AI Power Consumption

It has long been known that the human brain, which accounts for only about 2% of body weight, consumes up to 20% of the energy the body produces. How does artificial intelligence compare? Recent studies suggest that by the end of this year, the energy consumption of AI systems may exceed that of even such "voracious" consumers as cryptocurrency mining farms.

The explanation is the rapid development of AI, which is accompanied by the construction of new data centers fitted with specialized hardware from vendors such as Nvidia and AMD.

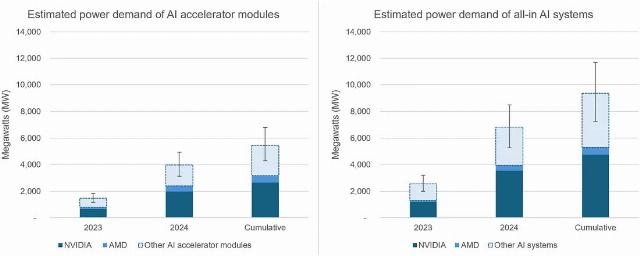

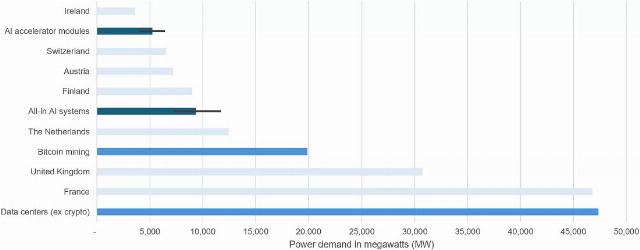

AI systems account for only about 20% of all energy consumed by data centers today, but that share is projected to reach 50% in 2026. For example, each Nvidia H100 AI accelerator draws 700 watts continuously when running complex models, and several million such accelerators are in operation. Alex de Vries-Gao, a researcher at the Institute for Environmental Research at the University of Amsterdam, estimates that AI hardware manufactured in 2023-24 currently draws 5.3-9.4 gigawatts of power, which is more than the total consumption of Ireland. At the same time, the AI technology giants are in no hurry to publish information about the energy consumption of their data centers, which makes it difficult to assess the environmental impact of their operations.
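To get a feel for these numbers, here is a rough back-of-the-envelope sketch in Python. It multiplies the 700-watt per-accelerator figure by a few fleet sizes and converts the 5.3-9.4 gigawatt estimate into energy per year. The fleet sizes and the function names are illustrative assumptions, not data from the study, and the calculation counts only the accelerators themselves, assuming they run at full draw around the clock.

```python
H100_POWER_WATTS = 700   # per-accelerator draw quoted in the article
HOURS_PER_YEAR = 8760    # 365 days x 24 hours


def fleet_power_gw(accelerator_count, watts_each=H100_POWER_WATTS):
    """Aggregate continuous power draw of a fleet, in gigawatts."""
    return accelerator_count * watts_each / 1e9


def annual_energy_twh(power_gw, hours=HOURS_PER_YEAR):
    """Energy used in a year at a constant power draw, in terawatt-hours."""
    return power_gw * hours / 1000


if __name__ == "__main__":
    # Hypothetical fleet sizes; the article only says "several million".
    for count in (1_000_000, 3_000_000, 5_000_000):
        print(f"{count:>9,} accelerators: {fleet_power_gw(count):.1f} GW")

    # The estimate cited in the article, converted to yearly energy.
    for gw in (5.3, 9.4):
        print(f"{gw} GW sustained for a year: {annual_energy_twh(gw):.1f} TWh")
```

Under these assumptions, one million accelerators alone draw 0.7 gigawatts, and a sustained 5.3-9.4 gigawatts corresponds to roughly 46-82 terawatt-hours per year.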

Alexander Ageev