Tesla appears to have acquired one of the most powerful supercomputers in the world. The installation currently delivers 1.8 exaFLOPS, and the key point is that this is only a third of the future Dojo supercomputer.

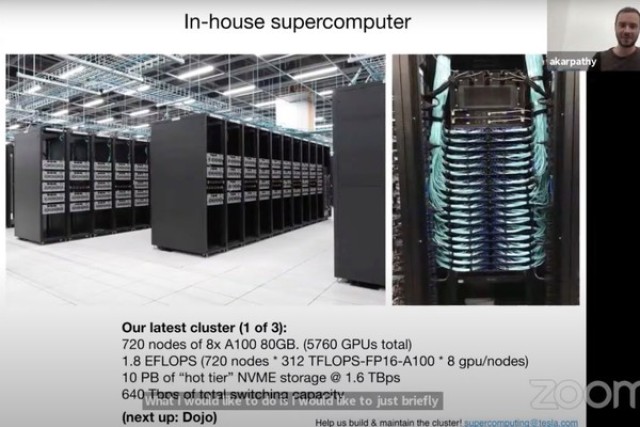

The system currently includes 720 nodes, each containing eight Nvidia A100 accelerators, for a total of 5,760 GPUs. Note that the 1.8 exaFLOPS figure is the performance of the tensor cores in half-precision (FP16) calculations. For tensor-core TF32 calculations, the figure is half that. Even so, this is not the kind of performance by which supercomputers are usually ranked.

The standard FP32 performance of an Nvidia A100 is 19.5 TFLOPS. For the current Tesla system, that works out to about 112.3 PFLOPS, a value based on the specifications of the Nvidia cards. The supercomputer has yet to run the High Performance Linpack (HPL) benchmark to take its place in the TOP500, but judging by the November list it would be in contention for third place. Andrej Karpathy, the head of AI development at Tesla, has for his part suggested fifth place.
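The arithmetic behind these totals can be checked with a short sketch. The node count, GPUs per node, and the 19.5 TFLOPS FP32 figure come from the article; the 312 TFLOPS per-GPU FP16 tensor-core figure is an assumption taken from Nvidia's published A100 specification (dense, without sparsity) and is not stated in the article. All variable and function names are illustrative.

```python
# Rough peak-performance estimate from per-GPU spec-sheet numbers.
# These are theoretical peaks, not measured HPL results.

NODES = 720                 # from the article
GPUS_PER_NODE = 8           # from the article
FP32_TFLOPS = 19.5          # per-GPU FP32, from the article
FP16_TENSOR_TFLOPS = 312.0  # per-GPU FP16 tensor core; assumed from Nvidia's A100 spec


def aggregate_tflops(per_gpu_tflops: float, gpus: int) -> float:
    """Scale a per-GPU peak figure linearly to the whole system."""
    return per_gpu_tflops * gpus


gpus = NODES * GPUS_PER_NODE

# 1 exaFLOPS = 1e6 TFLOPS, 1 petaFLOPS = 1e3 TFLOPS
fp16_exaflops = aggregate_tflops(FP16_TENSOR_TFLOPS, gpus) / 1e6
fp32_petaflops = aggregate_tflops(FP32_TFLOPS, gpus) / 1e3

print(f"GPUs: {gpus}")
print(f"FP16 tensor peak: {fp16_exaflops:.2f} exaFLOPS")
print(f"FP32 peak: {fp32_petaflops:.1f} petaFLOPS")
```

Linear scaling like this ignores interconnect and memory bottlenecks, which is one reason a measured HPL result typically lands below the theoretical peak.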

It is worth remembering, though, that the current version of the Tesla system is only a third of the final Dojo configuration, so the company may eventually have the second most powerful supercomputer in the world at its disposal.