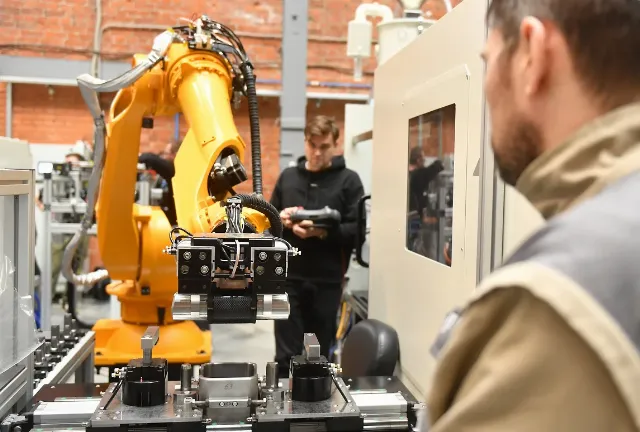

On the eve of Russian Science Day, Sberbank published the Green-VLA technical report, which covers the development of a key technology in physical artificial intelligence (Physical AI): Vision-Language-Action (VLA) models, which allow robots to understand the world around them, interpret instructions and turn them into meaningful physical actions. The report took first place among the papers of the day on Hugging Face, ahead of work by Moonshot AI and joint research by Chinese and American universities.

The report on Green-VLA, which is built on the GigaChat neural network, describes a practical approach to training such models, from base pre-training to tuning robot behavior in real-world conditions. The focus is not on a single demonstration but on a complete methodology that researchers and engineers can use to build reliable robotic systems.

Physical AI is a rapidly developing field. Modern robots demonstrate a wide range of capabilities, but the key challenges for further progress remain improving stability, ensuring cross-platform interaction, and performing complex multi-stage operations. Green-VLA offers a systematic approach to these problems, based on principles for training robot control systems that are measurable and proven in engineering practice.

The effectiveness of the approach is confirmed by state-of-the-art (SOTA) results both in practice and on the international benchmarks Simpler Fractal and Simpler WidowX (Stanford University and Google), as well as CALVIN (University of Freiburg).

Andrey Belevtsev, Senior Vice President and Head of Technological Development at Sberbank, said: "VLA technology is becoming the brain of physical artificial intelligence: Vision-Language-Action models turn vision and language into executable actions. It was these solutions that helped us build our own AI robot. In Green-VLA, we show how to make this layer reliable from an engineering standpoint, with portability between robots and behavior alignment through reinforcement learning, so that the model works not only in demos but also in reproducible scenarios and benchmarks. Sber plans to share its best practices to support the development of the domestic AI and robotics ecosystem, giving researchers and engineers a tool for creating innovative solutions."

The Green-VLA model is seen as the next step toward a Physical AI technology stack in which VLA models link perception of the world, task understanding, and physical action. This approach paves the way for more autonomous, robust, and versatile robotics solutions.

Green-VLA is positioned as an open training methodology rather than a ready-made universal controller for robots. The approach involves a base pre-training stage followed by adaptation to the target robotic system, which gives it flexibility and room to scale.
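
The two-stage idea (pre-train on broad data, then adapt to a specific robot) can be illustrated with a deliberately tiny toy model. The sketch below is not taken from the Green-VLA report: `ToyPolicy`, `fit`, `mse`, and all the numbers are hypothetical, and a single scalar gain stands in for what in a real VLA model would be a large neural network.

```python
from dataclasses import dataclass
from typing import List, Tuple

# One demonstration: (observation feature, demonstrated action). Hypothetical toy data.
Demo = Tuple[float, float]


@dataclass
class ToyPolicy:
    """Hypothetical stand-in for a VLA model: action = gain * observation."""
    gain: float = 0.0

    def act(self, observation: float) -> float:
        return self.gain * observation


def mse(policy: ToyPolicy, demos: List[Demo]) -> float:
    """Mean squared error between predicted and demonstrated actions."""
    return sum((policy.act(x) - a) ** 2 for x, a in demos) / len(demos)


def fit(policy: ToyPolicy, demos: List[Demo], lr: float, steps: int) -> ToyPolicy:
    """Plain gradient descent on the mean squared error."""
    for _ in range(steps):
        grad = sum(2 * (policy.act(x) - a) * x for x, a in demos) / len(demos)
        policy.gain -= lr * grad
    return policy


observations = [0.5, 1.0, 1.5, 2.0]

# Stage 1 (assumed): pooled demonstrations from two different robots,
# whose ideal gains are 1.0 and 3.0.
pooled = [(x, 1.0 * x) for x in observations] + [(x, 3.0 * x) for x in observations]
base = fit(ToyPolicy(), pooled, lr=0.05, steps=200)

# Stage 2 (assumed): a small set of demonstrations from the target robot,
# whose ideal gain is 2.5. Start from the pre-trained gain, not from zero.
target = [(x, 2.5 * x) for x in observations]
error_before = mse(base, target)
adapted = fit(ToyPolicy(gain=base.gain), target, lr=0.05, steps=50)
error_after = mse(adapted, target)
```

In this toy setting the pre-trained policy settles on a compromise between the two source robots, and a short adaptation run on the target robot's data reduces its error there. How Green-VLA actually structures these stages is described in the technical report itself.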