Media report plans to create a security testing ground for AI systems

High- and critical-risk artificial intelligence (AI) systems will need a certificate confirming compliance with the security requirements of the Federal Service for Technical and Export Control (FSTEC) and the FSB. RBC reported this, citing the government's draft law "On the use of artificial intelligence systems by bodies within the unified system of public authority and amendments to certain legislative acts."

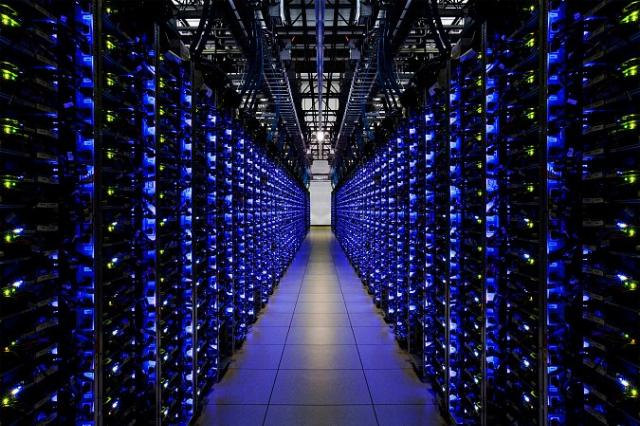

According to a source familiar with the initiative, whose account was confirmed by another source in the IT industry, AI systems will be tested for compliance with these requirements at a special testing ground created by the Ministry of Finance. Systems that pass the tests will be approved for use at critical information infrastructure facilities, which include communication networks and information systems of government agencies, as well as energy, transport, financial, telecommunications and a number of other companies. According to another source, information security market players approached the ministry with a proposal to create the testing ground, but he did not elaborate.

The bill introduces four levels of criticality for AI systems:

- minimal, when the systems do not have a significant impact on rights and security (for example, recommendation systems);

- limited, when systems must inform users that they are working with a neural network;

- high, when the systems are used at critical information infrastructure facilities;

- critical, when the systems threaten human life or health or state security, as well as systems capable of making decisions without human intervention.

A new body under the government, the National Center for Artificial Intelligence in Public Administration, will determine the criticality level of each system. The center will also maintain a register of systems approved for use at critical information infrastructure facilities. According to one of the sources, the requirements for obtaining the mandatory FSB and FSTEC certificate for high- and critical-risk AI systems will be set out later in a separate document. One of these requirements will be the inclusion of the AI system in the register of Russian or Eurasian software.

In addition, the bill bans the use at critical information infrastructure facilities of AI systems whose rights belong to foreign entities. According to the authors of the document, such measures will ensure technological sovereignty and protect against the risk of valuable data being transferred to the special services and intelligence agencies of unfriendly countries.

When asked about the AI testing ground, a representative of the Ministry of Finance replied only that "the ministry is not currently developing a bill" related to such a testing ground, and declined to comment further.

According to the analytics company Smart Ranking, the Russian AI market grew by 25-30% in 2025 and reached 1.9 trillion rubles. At the same time, 95% of AI monetization revenue came from the top five companies: Yandex (500 billion rubles), Sber (400 billion rubles), T-Technologies (350 billion rubles), VK (119 billion rubles) and Kaspersky Lab (more than 49 billion rubles).