Data analysis has become a core instrument in modern training and simulation systems. In military, industrial, and technical environments, performance is increasingly assessed through measurable indicators rather than subjective observation. Simulation platforms are now expected to record actions, timing, and outcomes with high precision.

This approach is not limited to defense. Civilian competitive environments have developed parallel systems that rely on continuous data collection and analysis.

Simulation and Analytics in Modern Training

Structured Environments and Measurable Behavior

Modern training simulations are built around controlled environments where decisions and reactions can be recorded. These systems track accuracy, response time, consistency, and adaptation under pressure. The purpose is not realism alone, but repeatability and comparability.

Such systems allow analysts to evaluate performance trends across multiple scenarios rather than isolated exercises.
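As a minimal sketch of how such recordings might be reduced to comparable indicators, the example below summarizes a list of recorded trials. The `Trial` schema, the field names, and the use of standard deviation as a consistency proxy are illustrative assumptions, not a description of any particular simulation platform.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Trial:
    """One recorded action in a training scenario (hypothetical schema)."""
    scenario: str
    hit: bool            # whether the action succeeded
    response_ms: float   # time from stimulus to action, in milliseconds


def summarize(trials):
    """Reduce a list of trials to accuracy, mean response time, and spread."""
    if not trials:
        raise ValueError("at least one trial is required")
    times = [t.response_ms for t in trials]
    return {
        "accuracy": sum(t.hit for t in trials) / len(trials),
        "mean_response_ms": mean(times),
        # Population standard deviation, used here as a simple consistency proxy.
        "response_spread_ms": pstdev(times),
    }


# Illustrative data only; the values are made up for the example.
session = [
    Trial("scenario_a", True, 200.0),
    Trial("scenario_a", False, 300.0),
    Trial("scenario_a", True, 250.0),
    Trial("scenario_a", True, 250.0),
]
print(summarize(session))
```

Because every session is reduced to the same small set of numbers, results from different scenarios or dates can be compared directly.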

The Importance of Large Data Volumes

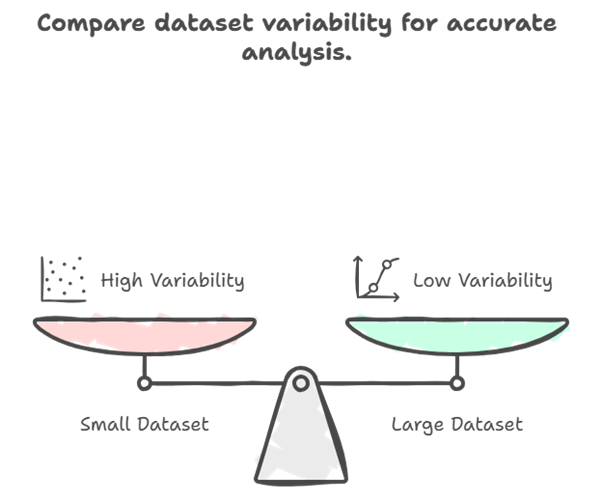

Analytical models generally become more effective as data volume grows. Larger datasets make pattern detection more reliable and reduce the influence of random variation. This principle applies equally to technical simulations and to other competitive systems that generate frequent, structured interactions.

Compare dataset. Source: Program
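One way to make the effect of data volume concrete is the standard error of a mean, which shrinks with the square root of the number of observations. The sketch below assumes independent observations with a known spread. The 40 ms figure is an arbitrary illustrative value, not a measurement.

```python
import math


def standard_error(sample_std, n):
    """Standard error of the mean for n independent observations."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return sample_std / math.sqrt(n)


# Assumed spread of 40 ms in individual response times (illustrative only).
for n in (25, 2500):
    print(n, standard_error(40.0, n))
```

Under these assumptions, a hundredfold increase in observations narrows the uncertainty of the average by a factor of ten, which is why frequent, structured interactions are analytically valuable.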

Competitive Gaming as a Data Source

Civilian Competitive Platforms

Competitive digital gaming produces large quantities of structured performance data. Each match records player actions, timing, success rates, and outcomes. Millions of such sessions are generated regularly under standardized rule sets.

From an analytical perspective, this creates an extensive dataset suitable for behavioral analysis.

Public Analytics Tools

Several public platforms demonstrate how this data is processed. Faceit Finder aggregates player statistics and match history across competitive environments. Faceit Analyser focuses on performance breakdowns, progression trends, and statistical correlations over time.

These tools show how raw telemetry can be converted into interpretable performance indicators without manual observation.

Analytical Methods and Practical Implications

Pattern Recognition and Skill Evaluation

By analyzing repeated actions, analytics systems can identify consistent strengths and weaknesses. Reaction delays, decision errors, and performance stability can be measured over extended periods. This approach allows evaluation based on long-term behavior rather than single outcomes.

Such methods are directly applicable to professional training and simulation systems.
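Evaluation based on long-term behavior can be sketched with two simple tools: a rolling mean that smooths single-session noise, and a least-squares slope that indicates whether a metric is improving. The function names and the per-session response times below are hypothetical. Real systems may use more robust estimators.

```python
from statistics import mean


def rolling_mean(values, window):
    """Mean of each consecutive run of `window` values."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [
        mean(values[i - window + 1 : i + 1])
        for i in range(window - 1, len(values))
    ]


def linear_trend(values):
    """Least-squares slope of the values against their session index."""
    n = len(values)
    if n < 2:
        raise ValueError("at least two values are required")
    x_mean = (n - 1) / 2
    y_mean = mean(values)
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


# Hypothetical mean response times (ms) over four consecutive sessions.
response_ms = [300.0, 290.0, 280.0, 270.0]
print(rolling_mean(response_ms, 2))
print(linear_trend(response_ms))
```

A negative slope here means response times are falling from session to session, which a single outcome could not show.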

Feedback and Adaptation

Data-driven systems enable targeted feedback. Training programs can be adjusted based on measurable deficiencies rather than generalized assumptions. This increases efficiency and reduces unnecessary repetition.

The same principle underlies many modern simulation platforms used in technical and operational training.
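A minimal sketch of targeted feedback, assuming each metric has a target value and a known direction (higher or lower is better), is to rank the metrics that miss their targets by relative shortfall. The metric names, targets, and the `find_deficiencies` helper are illustrative assumptions only.

```python
def find_deficiencies(metrics, targets):
    """Return (name, relative_gap) for metrics missing their target.

    `targets` maps a metric name to (target_value, higher_is_better).
    Results are sorted so the largest relative shortfall comes first.
    """
    gaps = []
    for name, (target, higher_is_better) in targets.items():
        value = metrics[name]
        if higher_is_better:
            gap = (target - value) / target
        else:
            gap = (value - target) / target
        if gap > 0:  # only metrics that actually miss the target
            gaps.append((name, gap))
    return sorted(gaps, key=lambda item: item[1], reverse=True)


# Hypothetical trainee metrics and program targets.
metrics = {"accuracy": 0.70, "mean_response_ms": 330.0, "response_spread_ms": 30.0}
targets = {
    "accuracy": (0.80, True),
    "mean_response_ms": (300.0, False),
    "response_spread_ms": (40.0, False),
}
for name, gap in find_deficiencies(metrics, targets):
    print(f"{name}: {gap:.1%} short of target")
```

In this example the spread metric already meets its target and is left out, so practice time can be directed at accuracy first and response time second.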

Limitations of Data-Centric Models

Despite their advantages, analytical systems have limits. Quantitative data cannot fully capture contextual factors such as emotional state or external stressors. Simulated environments also lack the consequences present in real-world situations.

Conclusion

The development of data-driven gaming analytics reflects broader trends in performance evaluation and simulation design. Civilian platforms provide accessible examples of large-scale behavioral analysis in controlled environments.

As analytical methods continue to evolve, the distinction between civilian competition systems and professional simulation platforms becomes less pronounced. Both rely on the same principles of measurement, comparison, and structured evaluation.